Integrate Local LLMs with n8n and Ollama for Chat Solutions

Enhance interactions with local LLMs; automate workflows and streamline communication using n8n and Ollama in this template.

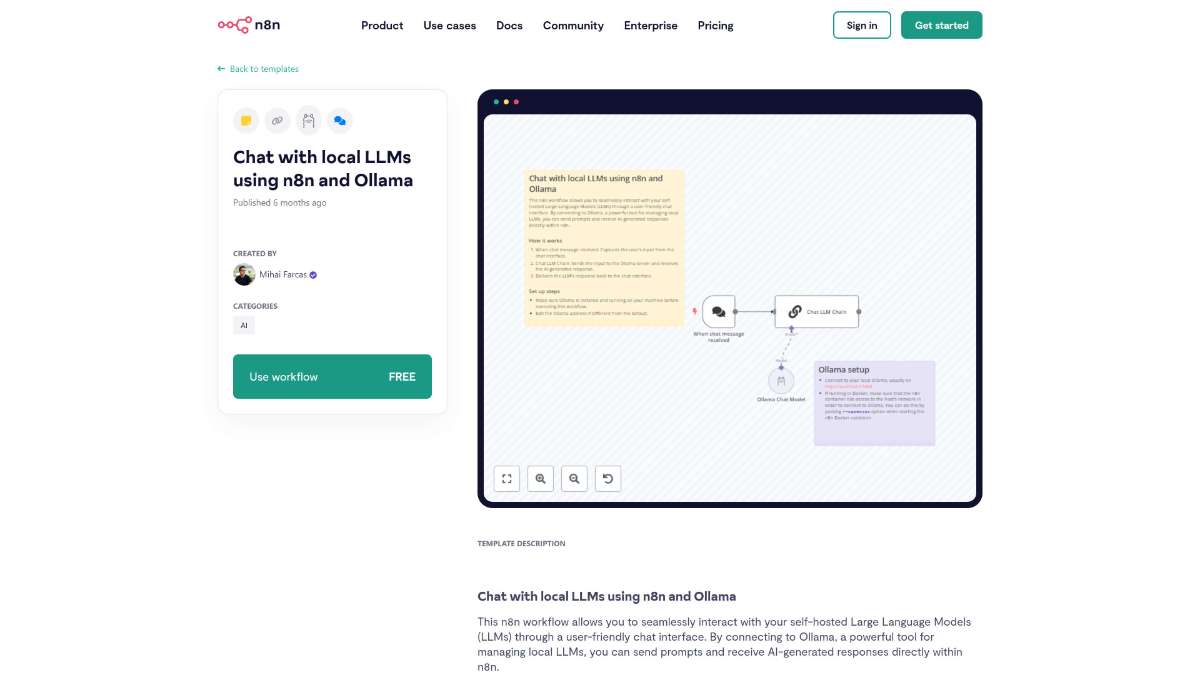

This n8n workflow lets you interact with your self-hosted Large Language Models (LLMs) through a chat interface. By integrating with Ollama, a tool for managing local LLMs, you can send prompts and receive AI-generated responses directly within n8n, keeping your AI communications private.

Who is this workflow for?

This workflow is ideal for developers, data scientists, and businesses looking to implement secure, cost-effective AI solutions. It caters to those who require private interactions with AI models and prefer managing their computational resources independently.

This n8n workflow provides a streamlined approach to interact with local Large Language Models using Ollama. It ensures data privacy, reduces costs, and offers a flexible environment for developing and experimenting with AI applications. By integrating these tools, users can effectively manage and utilize their own LLMs within a user-friendly chat interface.
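To make the prompt-and-response exchange described above more concrete, here is a minimal Python sketch of the kind of request a chat client could send to a local Ollama server. It is not taken from the template itself. It assumes Ollama is listening on its default local address (`http://localhost:11434`) and that a model has already been pulled. The model name `llama3` and the helper names `build_chat_request`, `extract_reply`, and `chat` are illustrative choices, not part of the workflow.

```python
import json
import urllib.request

# Assumption: Ollama's default local address; adjust if your server differs.
OLLAMA_URL = "http://localhost:11434/api/chat"


def build_chat_request(prompt, model="llama3"):
    """Build the JSON body for a single-turn, non-streaming chat request.

    The model name is a placeholder; use whichever model you have pulled.
    """
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one complete JSON response, not chunks
    }


def extract_reply(response_body):
    """Return the assistant's text from a non-streaming chat response."""
    return response_body["message"]["content"]


def chat(prompt, model="llama3", url=OLLAMA_URL, timeout=120):
    """Send one prompt to the local Ollama server and return its reply."""
    data = json.dumps(build_chat_request(prompt, model)).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_reply(json.load(response))
```

With Ollama running locally, calling `chat("Why is the sky blue?")` would return the model's reply as a string. Because the request never leaves your machine, this illustrates the privacy benefit the workflow relies on.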

Setup Steps

Integrations

Conclusion

Leverage this n8n workflow to harness the power of local LLMs with Ollama, achieving secure, cost-effective, and flexible AI interactions tailored to your specific requirements.
