
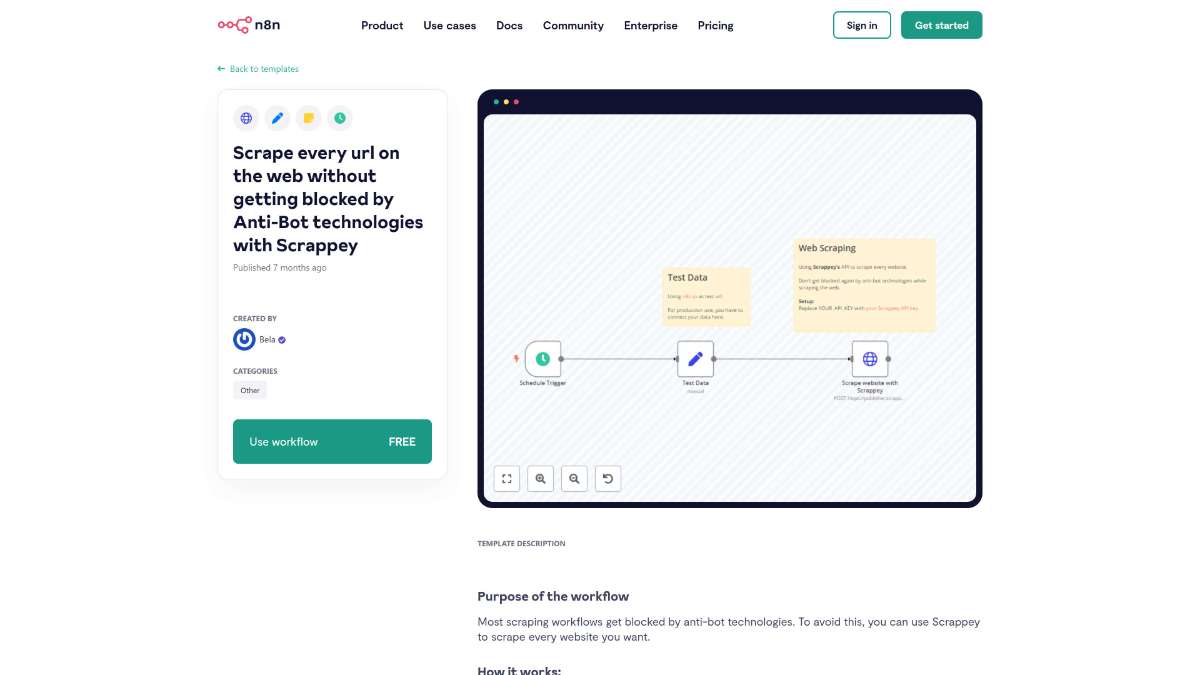

Automated Website Scraping Without Detection Using Scrappey and n8n

Discover how to scrape websites undetected with Scrappey and n8n. Automate data collection and ensure privacy with this powerful integration.


This workflow enables you to scrape websites without being blocked by anti-bot technologies. It uses Scrappey within the n8n automation platform to extract data from a wide range of websites reliably and efficiently.

Who is this workflow for? This workflow is ideal for data analysts, digital marketers, researchers, and developers who need to collect data from multiple websites regularly without being blocked. It caters to both technical and non-technical users seeking an automated scraping solution.

Replace YOUR_API_KEY with your actual Scrappey API key obtained from Scrappey.
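As a rough illustration of the YOUR_API_KEY step, the sketch below builds the kind of request an n8n HTTP Request node might send to Scrappey. The endpoint URL, the `key` query parameter, and the `cmd`/`url` body fields are assumptions made for this example and are not confirmed by this page; check Scrappey's documentation for the actual values. The helper name `build_scrappey_request` is hypothetical.

```python
from __future__ import annotations

from urllib.parse import urlencode

# Assumed endpoint; verify against Scrappey's documentation before use.
SCRAPPEY_ENDPOINT = "https://publisher.scrappey.com/api/v1"
PLACEHOLDER = "YOUR_API_KEY"


def build_scrappey_request(api_key: str, target_url: str) -> tuple[str, dict]:
    """Return (request_url, json_body) for a scrape of target_url.

    The payload shape here is an assumption for illustration only.
    No network call is made; this only assembles the request.
    """
    # Fail early if the placeholder was never replaced with a real key.
    if not api_key or api_key == PLACEHOLDER:
        raise ValueError("Replace YOUR_API_KEY with your actual Scrappey API key")
    request_url = f"{SCRAPPEY_ENDPOINT}?{urlencode({'key': api_key})}"
    body = {"cmd": "request.get", "url": target_url}
    return request_url, body
```

Failing early on an unreplaced placeholder surfaces the most common setup mistake before any request is sent.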

This n8n workflow, powered by Scrappey, provides a robust solution for scraping any website without encountering anti-bot blocks. By automating the data extraction and integration process, it enables efficient and scalable data collection tailored to your specific needs.
